It was initiated in early summer 2018 and went live in autumn that year. By December 2018, interested parties had joined us (e.g. the City of Vienna and the Austrian Economic Chambers). We also participate in the ASI working group for blockchains (AG 001.88).

In autumn 2019 it was renamed "Austrian Public Services Blockchain". So far only document hash values have been written to it, but other data are planned, such as Austria-internal public service root CAs.

This blog post explains a few technical design decisions.

The design of the system is fairly straightforward: a small self-written REST API as middleware, Multichain as the blockchain layer, and Tinc as a VPN between the nodes.

The REST API provides the usual endpoints:

- a POST with one or more hash values (including their algorithms) to write into the blockchain; it returns a random record ID (24 hex digits) and a dictionary (because JSON!) listing the blockchain(s) the record was written to, each with the transaction ID and the ETA (in seconds) of the block that will contain it;

- a GET to fetch a (limited) list of entries (typically the last hundred); this list is filtered by client, i.e. if some administrative procedure writes data, its list will only include the data written by that same client;

- a GET to retrieve a specific record, identified either by its ID or by one or more of its hash values; a request argument overrides the client check, so that any valid record can be verified (e.g. by a third party);

- and a file upload endpoint, but that one is only meant for debugging and testing, because it leaks the document to the blockchain server!
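The semantics of these endpoints can be modeled with a small in-memory store. This is a hypothetical sketch (the class, method, and field names are invented here, and no blockchain is involved): `write` stands for the POST, `list_recent` for the per-client list, and `get` for the single-record lookup, where `any_client=True` models the override used for third-party checks. `get` returns a list because, as explained further down, a hash value may match several records.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class Record:
    record_id: str
    client: str
    hashes: dict[str, str]  # algorithm name -> lowercase hex digest


@dataclass
class InMemoryStore:
    """Hypothetical stand-in for the blockchain-backed store behind the REST API."""

    records: list[Record] = field(default_factory=list)

    def write(self, client: str, hashes: dict[str, str]) -> str:
        # POST: store the hash values and hand out a random 24-hex-digit record ID
        record = Record(
            record_id=secrets.token_hex(12),
            client=client,
            hashes={alg: h.lower() for alg, h in hashes.items()},
        )
        self.records.append(record)
        return record.record_id

    def list_recent(self, client: str, limit: int = 100) -> list[Record]:
        # GET list: only the calling client's records, newest first
        own = [r for r in self.records if r.client == client]
        return own[::-1][:limit]

    def get(self, client: str, key: str, any_client: bool = False) -> list[Record]:
        # GET record: match by record ID or by any stored hash value;
        # any_client=True skips the client check (verification by a third party)
        key = key.lower()
        return [
            r
            for r in self.records
            if (any_client or r.client == client)
            and (r.record_id == key or key in r.hashes.values())
        ]


store = InMemoryStore()
rid = store.write("registry", {"sha256": "AB" * 32})
store.get("auditor", rid)                   # [] because of the client check
store.get("auditor", rid, any_client=True)  # [the record]
```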

So, let's start the discussion and explain some of these decisions.

Why Multichain?

Because it's open source and easy to install (a single binary to run, compared to several Docker containers for other products!); but the most important detail is that it basically provides a database: the JSON blobs in the transactions can be indexed (node-locally) via string keys, which makes lookups fast and easy, and in newer versions the blobs can also be checked for correctness (via JavaScript code that is itself registered and distributed via the same blockchain).
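To illustrate the "database" aspect, here is a sketch of the JSON-RPC request bodies a middleware might send to a Multichain node: `publish` with several keys (Multichain 2.0 accepts an array of keys and a `{"json": ...}` data object) and `liststreamkeyitems` to look items up by key. The stream name `documents`, the item layout, the helper names, and the idea of using the record ID plus every hash value as keys are assumptions for illustration. A node only indexes streams it has `subscribe`d to, and the bodies would be POSTed to the node's RPC port with its rpcuser/rpcpassword credentials.

```python
import json
from itertools import count

_request_ids = count(1)


def rpc_body(method: str, *params) -> str:
    """Serialize a JSON-RPC 1.0 request for multichaind's RPC port."""
    return json.dumps(
        {"jsonrpc": "1.0", "id": next(_request_ids), "method": method, "params": list(params)}
    )


def publish_record(stream: str, record_id: str, hashes: dict[str, str]) -> str:
    # Hypothetical layout: use the record ID and every hash value as keys, so the
    # item can be found via any of them once the node has subscribed to the stream
    keys = [record_id, *hashes.values()]
    item = {"json": {"id": record_id, "hashes": hashes}}
    return rpc_body("publish", stream, keys, item)


def lookup_by_key(stream: str, key: str) -> str:
    # liststreamkeyitems returns the items published under this key
    return rpc_body("liststreamkeyitems", stream, key)


print(publish_record("documents", "5f2c0e9a1b7d3c4e8a6f0b12", {"sha256": "ab" * 32}))
```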

The consensus algorithm doesn't waste energy like proof-of-work blockchains do; a simple list of known participants, each with one or more private keys, is easy enough to manage and fits this use case nicely. Multiple "streams" within one blockchain correspond roughly to multiple tables in a database; this way different data sets (see above) can be stored in a single instance without any conflicts between record types.

As an additional bonus, you can run a "cold" instance that only signs your transactions with your private key(s); the "public" (online) instance then only has a key for connecting to the network and cannot sign transactions itself, which is quite nice if you want more security.

Why a separate ID for each record, and not just using the document hash values or the transaction ID?

The document hash values need not be unique: people sending in PDF forms might mistakenly upload the original (empty) form. Then the same hash would show up for several uploads, and it would no longer identify a single document and upload time! So when writing data we hand out a separate ID, which is easy to index as well.
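A minimal sketch of that reasoning, assuming the 24-hex-digit record ID is simply 12 bytes from a cryptographically secure random source (how the service actually generates it isn't stated; `new_record_id` is a hypothetical name): two uploads of the same empty form share a hash, but still get distinct record IDs.

```python
import hashlib
import secrets


def new_record_id() -> str:
    # Hypothetical generator: 12 random bytes from the OS CSPRNG,
    # rendered as 24 hex digits (96 bits)
    return secrets.token_hex(12)


# Placeholder bytes standing in for the unfilled PDF form
empty_form = b"%PDF-1.7 (unfilled form)"
digest = hashlib.sha256(empty_form).hexdigest()

# Two people mistakenly upload the same empty form: same hash, different records
uploads = {new_record_id(): digest for _ in range(2)}
```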

Wouldn't a name for each document be useful, and make it easier to find later on?

It might make the document easier to find, but there's a good chance that the name leaks PII (personally identifiable information), so we don't store it.

Why are there multiple hash values and not just a single one?

Storing multiple hashes for a single document improves security: while collisions are already known for MD5 and for SHA-1, finding a collision on both at the same time isn't feasible yet (right?). In any case, we restrict the algorithms to those with an output of 160 bits or more, so SHA-1 and stronger are allowed (but not MD5). There's also an optional field for the file size (in bytes), which gives a forged document one more property to match and helps against attacks that append data, such as length extension attacks.
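A sketch of how such an input check could look in Python. It assumes algorithm names are spelled the way `hashlib` spells them (`sha1`, `sha256`, `sha3_256`, ...), which may not match the service's actual naming; `check_hash_entry` and `describe` are hypothetical helpers.

```python
import hashlib

MIN_BITS = 160
HEX_DIGITS = set("0123456789abcdef")


def check_hash_entry(algorithm: str, hex_digest: str) -> None:
    """Raise ValueError for unknown or too-short algorithms and malformed digests."""
    try:
        bits = hashlib.new(algorithm).digest_size * 8
    except ValueError:
        raise ValueError(f"unknown algorithm: {algorithm}") from None
    if bits < MIN_BITS:  # rejects md5 (128 bits); shake_* report 0 bits here
        raise ValueError(f"{algorithm} yields only {bits} bits")
    if len(hex_digest) != bits // 4 or not set(hex_digest.lower()) <= HEX_DIGITS:
        raise ValueError(f"malformed {algorithm} digest")


def describe(data: bytes, algorithms=("sha1", "sha256", "sha3_256")) -> dict:
    """Compute several hash values plus the optional size field for one document."""
    return {
        "hashes": {alg: hashlib.new(alg, data).hexdigest() for alg in algorithms},
        "size": len(data),
    }
```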

Why is there a VPN in use? Isn't a blockchain "safe" by design?

Basically, yes. But to harden it against hacking attempts, we limit network access to our partners for now.

What about unbounded data growth?

We anticipate that we will need to switch blockchain instances at some point: either because we switch products, because we switch between incompatible configurations of a product (e.g. if Multichain decides to move from SHA-256 to SHA-3), or simply because the current blockchain has become "large enough" (in terms of age, disk space, etc.).

In that case the consortium can decide to do a roll-over:

- create a new blockchain in the same VPN;

- entangle it with the old chain(s) by storing a few block hashes crosswise over a week or so;

- and finally just switch the writing process to the new blockchain. (This also allows revoking all write permissions (of all partners!) on the old blockchain, and/or moving it to some read-only media.)
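To show why the crosswise entanglement helps, here is a toy model: two hash-linked chains (a deliberately simplified stand-in, not Multichain's block format) record each other's current tip hash over a few rounds. Afterwards, rewriting the old chain's history would change hashes that are already recorded in the new one, and vice versa. `ToyChain`, `entangle`, and the second chain's date are hypothetical.

```python
import hashlib
import json


class ToyChain:
    """Minimal hash-linked list of blocks; no consensus, no signatures."""

    def __init__(self, name: str):
        self.name = name
        self.blocks = [{"prev": "0" * 64, "data": f"genesis of {name}"}]

    @staticmethod
    def block_hash(block: dict) -> str:
        return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()

    def tip(self) -> str:
        return self.block_hash(self.blocks[-1])

    def append(self, data) -> str:
        self.blocks.append({"prev": self.tip(), "data": data})
        return self.tip()


def entangle(old: ToyChain, new: ToyChain, rounds: int) -> None:
    # Crosswise anchoring: each round, each chain stores the other's current tip
    for _ in range(rounds):
        new.append({"anchor": old.name, "hash": old.tip()})
        old.append({"anchor": new.name, "hash": new.tip()})


old, new = ToyChain("APSBC-20191017"), ToyChain("APSBC-20291017")  # second name hypothetical
old.append("some notarized record")
entangle(old, new, rounds=3)
```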

Newly written records will then simply return the new blockchain's name along with the transaction ID; and if the consortium decides that old data can be dropped (say, after 30 years or so), the old chain's data directory gets deleted.

For that reason the internal blockchain instances have a date in the name, like APSBC-20191017.
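With dated names, picking the instance to write to is a one-liner. A small sketch assuming names always end in `-YYYYMMDD`; the function names and the second instance name are hypothetical. Parsing the date, rather than just sorting strings, also rejects malformed names.

```python
from datetime import date, datetime


def chain_date(name: str) -> date:
    # "APSBC-20191017" -> date(2019, 10, 17); raises ValueError on other formats
    return datetime.strptime(name.rsplit("-", 1)[-1], "%Y%m%d").date()


def write_target(chains: list[str]) -> str:
    # New records always go to the most recent instance
    return max(chains, key=chain_date)


print(write_target(["APSBC-20191017", "APSBC-20291017"]))  # APSBC-20291017
```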

As another interesting detail, our agreement also specifies that each document must include at least 128 bits of random data (e.g. in an ID or some invisible field), so that nearly identical documents can't be guessed! Imagine notarizing training certificates: by modifying your own PDF to carry your neighbor's name and various training dates, you might find out whether they are certified as well; including random data makes this infeasible.
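A sketch of why the random part matters, using a plain-text stand-in for the PDF (the certificate layout, the names, and the `certificate` helper are made up for illustration); the 128 bits come from Python's `secrets` module.

```python
import hashlib
import secrets


def certificate(name: str, course: str, day: str, nonce: str) -> bytes:
    # Hypothetical stand-in for the PDF; the nonce would sit in an ID or hidden field
    return f"Certificate\nName: {name}\nCourse: {course}\nDate: {day}\nRef: {nonce}\n".encode()


nonce = secrets.token_hex(16)  # 16 bytes = 128 bits
stored = hashlib.sha256(certificate("Jane Doe", "First aid", "2019-05-02", nonce)).hexdigest()

# Guessing the visible fields correctly is no longer enough: the hash also depends
# on the unknown nonce, so an attacker would have to try on the order of 2**128
# values for every combination of name, course and date.
guess = hashlib.sha256(certificate("Jane Doe", "First aid", "2019-05-02", "0" * 32)).hexdigest()
```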

A nice trick is to use the blockchain's current block hash: by embedding it as "random" data, you can also prove that the last edit must have happened after the referenced block, but before the block the transaction got stored in. For automated document creation, a nice, narrow interval of about a minute, down to tens of seconds, is very likely, which is a good achievement.
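A sketch of the resulting time window. One caveat: block hashes are public and there are comparatively few of them in any time range, so on their own they don't provide 128 unguessable bits; this sketch therefore assumes the block hash is embedded alongside a separate random value. `Block`, `make_ref`, `edit_window`, the `hash:nonce` format, and the 15-second block interval in the usage lines are all hypothetical. Block timestamps are set by the producing node, so the window is only as precise as the nodes' clocks.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    height: int
    hash_hex: str
    time: int  # Unix timestamp from the block header


def make_ref(tip: Block) -> str:
    # Embed the current block hash (the "not before" proof) plus a private
    # 128-bit random part, since block hashes alone are public and enumerable
    return f"{tip.hash_hex}:{secrets.token_hex(16)}"


def edit_window(chain: list[Block], ref: str, containing: Block) -> tuple[int, int]:
    """Return (earliest, latest) Unix time at which the last edit can have happened."""
    referenced_hash = ref.split(":", 1)[0]
    referenced = next((b for b in chain if b.hash_hex == referenced_hash), None)
    if referenced is None or referenced.height >= containing.height:
        raise ValueError("referenced block must exist and precede the containing block")
    return referenced.time, containing.time


# Hypothetical chain with one block every 15 seconds
chain = [Block(h, f"{h:064x}", 1_570_000_000 + 15 * h) for h in range(10)]
ref = make_ref(chain[3])
print(edit_window(chain, ref, chain[7]))  # (1570000045, 1570000105)
```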

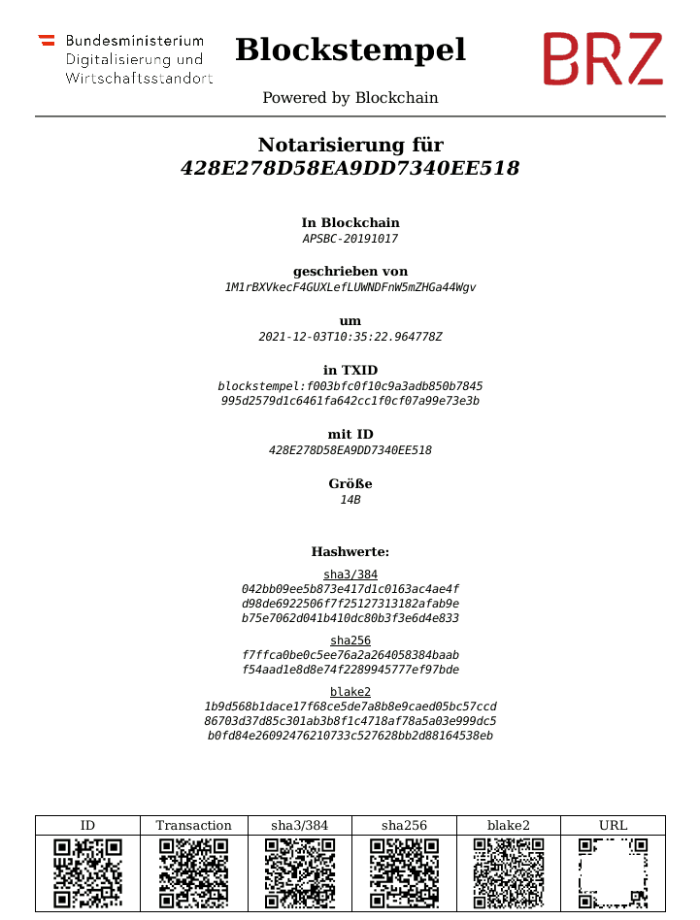

The last, but not least, tidbit is another GET endpoint in the server code that displays a nicely formatted web page for a specific record; it includes a few QR codes (for the URL, the block hash, the document hashes, and the record ID) and can be printed or converted to a PDF for later perusal.

For this example picture, I submitted my /etc/timezone file to Blockstempel; the URL is redacted, as it wouldn't be publicly available anyway.